Published Feb 6, 2025

Concurrency best practices for large data volumes

In an iPaaS, concurrency is the platform’s ability to execute multiple tasks, processes, or workflows simultaneously. This enables integrations to run in parallel, efficiently managing overlapping operations such as concurrent API calls and large data transfers. By dividing workloads into smaller, parallel units, concurrency enhances performance, speeds up processing, and optimizes resource utilization.

Think of concurrency like a highway system. Without it, data moves along a single-lane road, forcing each task to wait for the one ahead, leading to slowdowns and bottlenecks. With concurrency, the system expands into a multi-lane highway, allowing multiple processes to run simultaneously, reducing congestion and improving speed. Just as traffic signals optimize vehicle flow, concurrency ensures efficient resource allocation, keeping integrations running smoothly without unnecessary delays.

Managing concurrency is critical for high-volume data processing. The right configuration speeds up data synchronization, prevents API throttling, and ensures consistent performance. Poorly managed concurrency, on the other hand, leads to delays, exhausted resource limits, and missed SLAs.

Here, we’ll explore how Celigo’s tools help you configure, monitor, and scale concurrency settings to keep your integrations fast, efficient, and reliable—even under heavy workloads.

Key benefits of concurrency management

- Enhanced performance and speed

Processing tasks in parallel significantly reduces workflow completion times.

- Example: Syncing 10,000 records with concurrency set to five threads can be five times faster than processing one record at a time.

- Improved scalability

Concurrency ensures systems can handle growing data volumes without performance degradation, adapting to the demands of scaling businesses.

- Reduced latency for time-sensitive workflows

Processes like inventory synchronization or payment processing benefit from concurrency by minimizing delays and meeting real-time requirements.

- Optimized resource utilization

Concurrency ensures efficient use of system resources, minimizing idle time and maximizing throughput.

- Support for complex workflows

Concurrent processing enables businesses to handle interdependent tasks, such as syncing parent and child records, without bottlenecks.

- Compliance with API governance

Configuring concurrency appropriately helps avoid exceeding API rate limits, prevent throttling errors, and maintain consistent system performance.
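To make the arithmetic behind the 10,000-record example concrete, here is a minimal Python sketch of an idealized estimate. It assumes every record takes the same time and that workers never wait on each other or on rate limits, which real integrations rarely achieve. The function name and the 0.2-second per-record figure are hypothetical.

```python
import math


def estimated_runtime_seconds(records: int, seconds_per_record: float, concurrency: int) -> float:
    """Idealized runtime when records are spread evenly across workers."""
    if concurrency < 1:
        raise ValueError("concurrency must be at least 1")
    # Each worker processes at most ceil(records / concurrency) records in sequence.
    records_per_worker = math.ceil(records / concurrency)
    return records_per_worker * seconds_per_record


# Hypothetical workload: 10,000 records at 0.2 seconds each.
sequential = estimated_runtime_seconds(10_000, 0.2, concurrency=1)
parallel = estimated_runtime_seconds(10_000, 0.2, concurrency=5)
speedup = sequential / parallel
```

Under these assumptions the speedup equals the concurrency level. In practice, API rate limits, uneven record sizes, and shared resources usually keep the real gain below that ceiling.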

Examples of concurrency in an iPaaS

- Ecommerce order processing

Concurrency allows multiple customer orders to be processed simultaneously, syncing with an ERP system in parallel. This prevents bottlenecks and ensures timely order fulfillment, even during peak sales periods.

- Real-time inventory updates

Concurrency enables parallel API calls across multiple platforms (ERP, marketplaces, and warehouse management systems), significantly reducing the time required to update stock levels in real-time and preventing overselling and discrepancies.

- Employee onboarding automation

When a new employee is hired, concurrency allows multiple onboarding tasks (such as account creation, payroll setup, and software access provisioning) to be processed simultaneously across different systems, accelerating the onboarding experience and reducing manual delays.

Core principles of concurrency in iPaaS

- Parallel process execution

Execute multiple workflows or tasks simultaneously while efficiently managing resources to prevent contention and performance degradation.

- Dynamic workload scaling

Automatically scale workloads up or down in response to demand, leveraging cloud-based architectures to optimize resource allocation for concurrent processes.

- Error management and resilience

Isolate and handle errors within concurrent processes independently, preventing failures from cascading and ensuring uninterrupted workflow execution.

- Throughput and latency optimization

Maximize data throughput while minimizing latency to maintain high-speed, efficient processing, even under heavy concurrent workloads.

Best practices for managing large data volumes

Optimize flow design

- Partition data intelligently: Break down large datasets into logical segments (e.g., geographic regions, product categories) to enable parallel processing and reduce load on individual API calls.

- Example: Partitioning order data by region (e.g., North America, EMEA, APAC) helps distribute processing load, reduces API throttling risks, and improves overall runtime efficiency.

- Use prebuilt templates: Leverage Celigo’s preconfigured templates to streamline integration setup for common use cases.
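As an illustration of partitioning by region, the following Python sketch groups order records by a key so each group can be handed to its own flow. It is a generic example, not Celigo's implementation; the `partition_by` helper and the record fields are hypothetical.

```python
from collections import defaultdict


def partition_by(records, key):
    """Group records into lists keyed by the value stored under `key`."""
    partitions = defaultdict(list)
    for record in records:
        partitions[record[key]].append(record)
    return dict(partitions)


# Hypothetical order records; real payloads would carry many more fields.
orders = [
    {"id": 1, "region": "North America"},
    {"id": 2, "region": "EMEA"},
    {"id": 3, "region": "APAC"},
    {"id": 4, "region": "EMEA"},
]
by_region = partition_by(orders, "region")
```

Choosing a key with reasonably even distribution matters: if one region holds most of the orders, that partition still dominates total runtime.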

Configure concurrency settings effectively

Tailor concurrency settings to align with system capacity and API rate limits to optimize performance.

Steps to configure:

- Review API rate limits and thresholds: Check API documentation for rate limits, batch processing capabilities, and recommended concurrency settings.

- Set connection concurrency: Configure concurrency levels to match the API’s processing capacity without exceeding limits.

- Validate in a sandbox: Test configurations in a non-production environment to prevent disruptions in live operations.
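One way to turn a documented rate limit into a starting concurrency value is a rough steady-state estimate. The Python sketch below assumes each worker sends its next request as soon as the previous one returns, so throughput is approximately concurrency divided by average response time. The function name, the 80% headroom factor, and the sample numbers are assumptions for illustration, not Celigo defaults.

```python
import math


def max_safe_concurrency(
    requests_per_minute: float,
    avg_response_seconds: float,
    headroom: float = 0.8,
) -> int:
    """Largest worker count whose estimated request rate stays under the limit.

    Assumes throughput ~= concurrency / avg_response_seconds (no throttle delay).
    """
    allowed_per_second = (requests_per_minute / 60.0) * headroom
    # Never return less than 1; a single worker may still need a throttle delay.
    return max(1, math.floor(allowed_per_second * avg_response_seconds))


# Hypothetical API: 600 requests per minute, 0.5 s average response time.
suggested = max_safe_concurrency(600, 0.5)
```

When the estimate bottoms out at 1 (for example, a strict limit combined with a fast API), a single worker can still exceed the limit, so a throttle delay is needed as well. Treat the result as a value to validate in a sandbox, not a guarantee.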

Enable delta data processing

Delta processing improves efficiency by syncing only modified or newly created records, reducing API usage and processing time.

How to configure:

- Create a filter: Define conditions to extract only records updated since the last successful sync.

- Activate delta processing: Enable delta settings in the flow configuration to track and process incremental changes.

- Validate results: Compare synced records against source logs to confirm accuracy.
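The filter step can be sketched in a few lines of Python. This is a generic illustration of delta selection rather than Celigo's delta mechanism; the `last_modified` field, the `select_delta` helper, and the sample timestamps are hypothetical.

```python
from datetime import datetime, timezone


def select_delta(records, last_sync):
    """Return only the records modified strictly after the last successful sync."""
    return [r for r in records if r["last_modified"] > last_sync]


last_sync = datetime(2025, 2, 1, tzinfo=timezone.utc)
records = [
    {"id": "A", "last_modified": datetime(2025, 1, 30, tzinfo=timezone.utc)},
    {"id": "B", "last_modified": datetime(2025, 2, 3, tzinfo=timezone.utc)},
    {"id": "C", "last_modified": datetime(2025, 2, 5, tzinfo=timezone.utc)},
]
delta = select_delta(records, last_sync)

# Advance the checkpoint only after the sync has succeeded, so a failed run
# picks up the same records again on the next attempt.
new_checkpoint = max((r["last_modified"] for r in delta), default=last_sync)
```

Using timezone-aware timestamps avoids missed or duplicated records when the source and target systems report times in different zones.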

Monitor and scale dynamically

- Use Celigo’s monitoring tools to track key performance metrics such as execution time, error rates, and throughput.

- Scale runtime resources dynamically based on data volume fluctuations, ensuring smooth operations during peak loads.

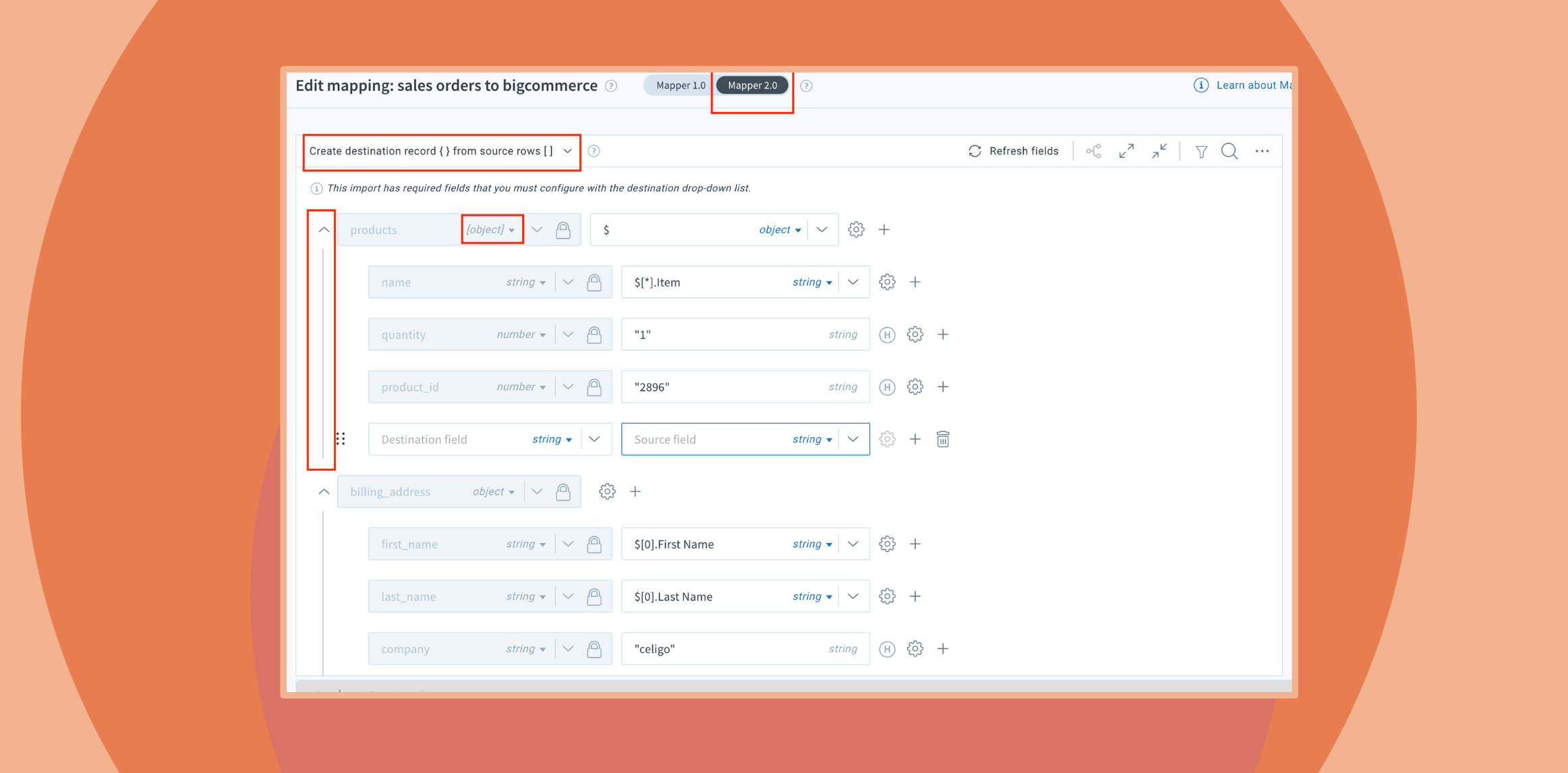

Leverage parallel flows

- Running datasets through multiple concurrent flows reduces runtime while maintaining system stability.

- Example: Instead of processing all inventory data in a single flow, split it by product categories (e.g., electronics, apparel) to prevent bottlenecks and improve processing speed.
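The idea of running one flow per category at the same time can be sketched with Python's standard thread pool. The `sync_category` function is a hypothetical stand-in for a flow run, and the inventory data is made up; a real flow would call the target system instead of counting items.

```python
from concurrent.futures import ThreadPoolExecutor


def sync_category(category, items):
    """Stand-in for a flow run: returns the category and how many items it handled."""
    return category, len(items)


def run_parallel_flows(partitions, max_workers=2):
    """Submit one task per partition and collect the results once all finish."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = [
            pool.submit(sync_category, name, items)
            for name, items in partitions.items()
        ]
        return dict(future.result() for future in futures)


# Hypothetical inventory split by product category.
inventory = {
    "electronics": ["tv", "laptop", "phone"],
    "apparel": ["shirt", "jacket"],
}
synced_counts = run_parallel_flows(inventory)
```

Keeping `max_workers` at or below the target API's concurrency allowance prevents the parallel flows from competing for the same rate limit.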

Celigo’s approach to concurrency

Celigo provides powerful tools to configure, monitor, and scale workflows, delivering reliable performance even under high-volume demands.

Key features include:

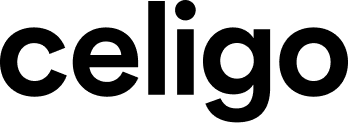

Dynamic paging and parallel processing

- What it does: Divides large datasets into smaller, manageable chunks for parallel execution, reducing processing time and system strain.

- Benefits: Optimizes API efficiency, minimizes load on target systems, and improves data throughput.

- Example: Adjusting Salesforce SOQL query page sizes to 200–1000 records balances performance while preventing timeouts and API rate limit violations.
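Paging itself is simple to illustrate. The Python sketch below splits a dataset into fixed-size pages that could then be processed in parallel; it is a generic example, not the Salesforce or Celigo paging API, and the `paginate` helper is hypothetical.

```python
from typing import Iterator, Sequence


def paginate(records: Sequence, page_size: int) -> Iterator[Sequence]:
    """Yield consecutive slices of at most `page_size` records."""
    if page_size < 1:
        raise ValueError("page_size must be at least 1")
    for start in range(0, len(records), page_size):
        yield records[start:start + page_size]


# Hypothetical dataset of 1,050 record IDs split into pages of 200.
pages = list(paginate(list(range(1050)), page_size=200))
```

Smaller pages mean more requests but smaller payloads; larger pages cut request counts at the cost of longer responses, which is the trade-off the 200–1000 range in the example is balancing.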

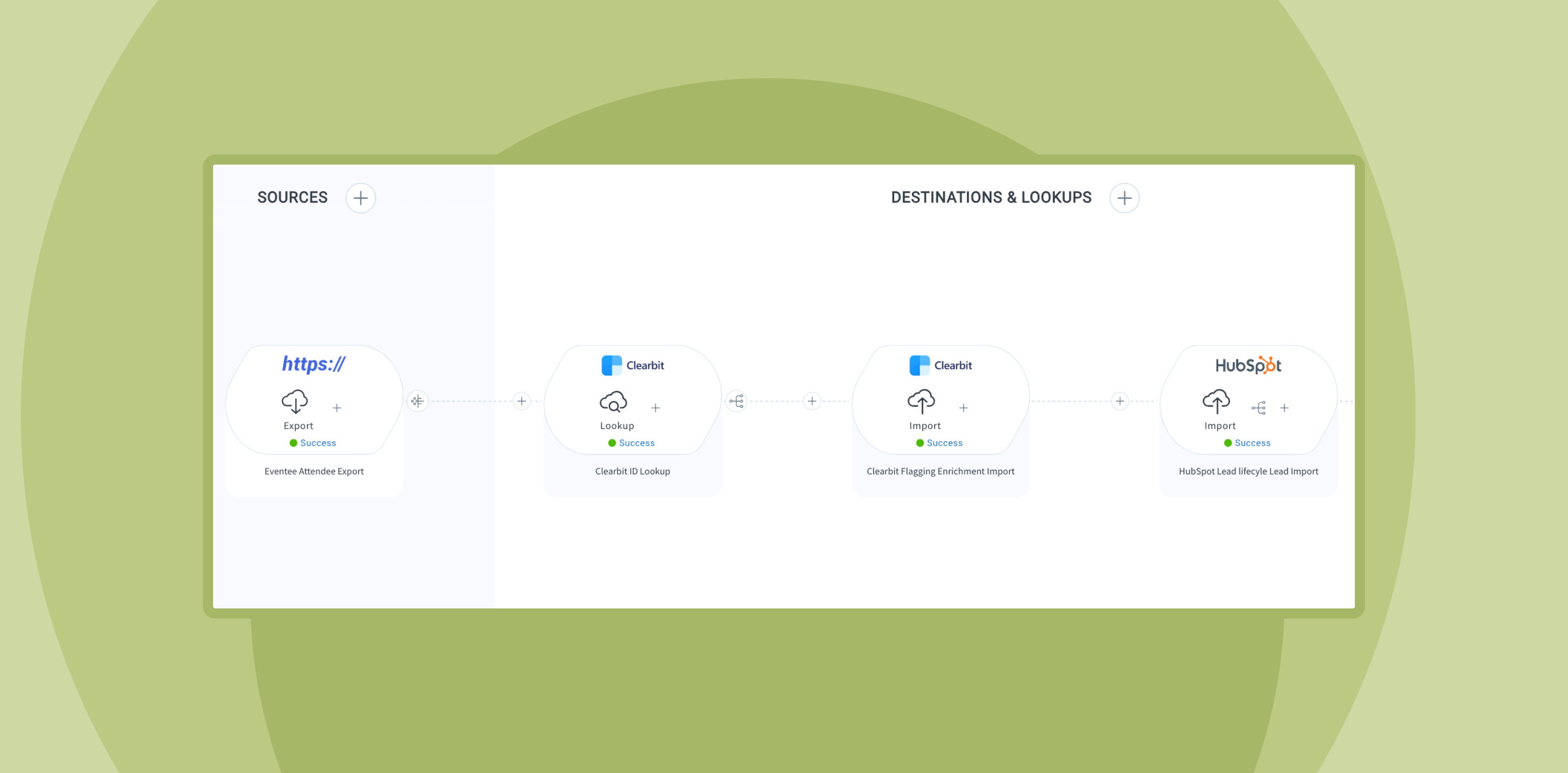

AI-powered optimizations

- Predictive monitoring: AI continuously analyzes historical and real-time data to detect anomalies and suggest proactive adjustments, preventing issues like API throttling.

- Example: If API usage nears rate limits, Celigo recommends reducing thread counts or increasing throttle delays to maintain smooth processing.

- Dynamic scaling insights: AI optimizes key settings such as page sizes, thread counts, and throttle delays based on actual workload patterns, ensuring workflows automatically adapt to peak loads.

Built-in exception management

- Automated retries: Resolves transient errors (e.g., timeouts, temporary API outages) without manual intervention.

- Example: A network outage triggers automatic retries, preventing workflow disruptions.

- Detailed error logs: Captures persistent issues (e.g., invalid credentials, permission errors) for manual review.

- Tagging and assignment: Classifies exceptions by type and assigns them to the appropriate team members for faster resolution.
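The split between transient and persistent errors can be sketched as a retry wrapper. This Python example is a generic pattern with exponential backoff, not Celigo's retry logic; `TransientError`, `call_with_retries`, and the delay values are assumptions for illustration.

```python
import time


class TransientError(Exception):
    """Hypothetical marker for errors worth retrying (timeouts, brief outages)."""


def call_with_retries(operation, max_attempts=3, base_delay=1.0, sleep=time.sleep):
    """Retry `operation` on TransientError, doubling the wait after each failure.

    Any other exception (e.g. invalid credentials) propagates immediately so it
    can be logged for manual review.
    """
    for attempt in range(1, max_attempts + 1):
        try:
            return operation()
        except TransientError:
            if attempt == max_attempts:
                raise
            sleep(base_delay * 2 ** (attempt - 1))


# Demo: an operation that fails twice with a timeout, then succeeds.
attempts = {"count": 0}


def flaky():
    attempts["count"] += 1
    if attempts["count"] < 3:
        raise TransientError("timeout")
    return "ok"


delays = []  # record the waits instead of actually sleeping
result = call_with_retries(flaky, sleep=delays.append)
```

Passing `sleep` as a parameter keeps the demo instant; in production the default `time.sleep` would apply the real delay.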

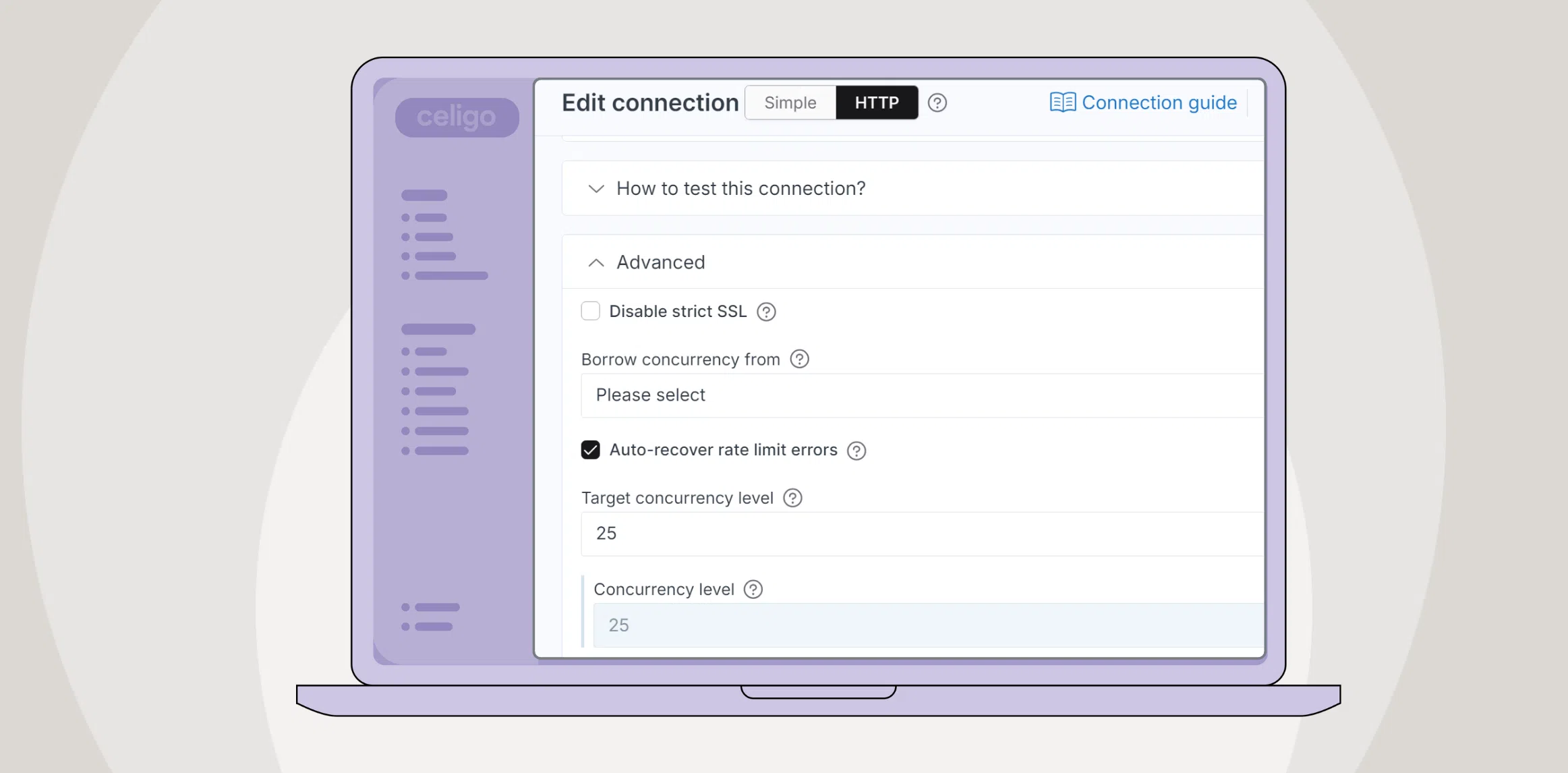

Connection-level concurrency controls

- What it does: Configures concurrent API requests to maximize throughput while preventing throttling.

- Example: If an API has a 60-requests-per-minute limit, Celigo can limit concurrency to 5 requests with a 1-second throttle delay, ensuring efficient and compliant execution.
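To see how a concurrency cap and a throttle delay interact, the Python sketch below simulates request start times. It rests on an assumption that may not match Celigo's actual semantics: the throttle delay is treated as a minimum gap between consecutive request starts on the connection, while concurrency caps how many requests are in flight. The function name and latency values are hypothetical.

```python
import heapq


def simulate_starts(n_requests, concurrency, throttle_delay, latency):
    """Return the start time of each request under the assumed model."""
    in_flight = []  # min-heap of finish times for requests holding a slot
    starts = []
    next_allowed = 0.0  # earliest start permitted by the throttle delay
    for _ in range(n_requests):
        start = next_allowed
        if len(in_flight) >= concurrency:
            # All slots taken: wait for the earliest request to finish.
            start = max(start, heapq.heappop(in_flight))
        starts.append(start)
        heapq.heappush(in_flight, start + latency)
        next_allowed = start + throttle_delay
    return starts


# 5 concurrent requests, 1-second throttle delay, 0.3 s responses (hypothetical).
starts = simulate_starts(120, concurrency=5, throttle_delay=1.0, latency=0.3)
sent_in_first_minute = sum(1 for s in starts if s < 60)
```

Under this model the throttle delay, not the concurrency level, is what holds the rate at 60 requests per minute; concurrency only becomes the bottleneck when responses are slow. If the delay were applied per worker instead, five workers could send several times more requests, so it is worth confirming the behavior in a sandbox.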

Preserving record order

For workflows requiring sequential data processing, Celigo’s Preserve Record Order feature ensures data integrity, making it ideal for:

- Financial transactions (e.g., invoice processing)

- Parent-child relationships (e.g., hierarchical customer or product data)

Elastic scalability

- What it does: Celigo’s cloud-native infrastructure dynamically scales to handle workload spikes, ensuring consistent performance without manual intervention.

- Powered by:

- Amazon SQS – Efficient message queuing for parallel processing

- Amazon S3 – Secure and scalable storage for large datasets

- MongoDB – High-performance data persistence for fast retrieval

Recommended concurrency settings for common scenarios

| Scenario | Recommended Settings | Notes |
| --- | --- | --- |
| APIs with strict rate limits | Concurrency: 5 requests; Throttle Delay: 1 second | Ensure the total number of requests does not exceed API rate limits. |
| High-latency APIs | Page Size: 50–100 records; Concurrency: 2–3 threads | Smaller page sizes reduce payload size and avoid timeouts caused by slow responses. |
| Low-latency, high-capacity APIs | Page Size: 1000+ records; Concurrency: 5–10 threads | Larger pages and higher concurrency leverage system capacity for faster data processing. |
| Data requiring sequential processing | Concurrency: 1 thread; Enable Preserve Record Order | Ensures data is processed in the correct order. |
| Dynamic workloads | Enable Dynamic Scaling; Monitor using dashboards | Adjust concurrency dynamically during peak loads based on real-time metrics. |
| Error-prone APIs | Enable Automated Retries; Retry Delay: 1–5 seconds | Configure retries to handle transient errors like timeouts or temporary unavailability. |

Customization may be required based on the specific API or workload, and regular monitoring is essential for maintaining optimal performance.

Beyond performance tuning, proactive error management helps detect and address potential issues before they escalate. Automated alerts can notify teams of problems, such as exceeding API rate limits or connection failures due to high request volumes, allowing immediate action to prevent disruptions.

Celigo’s concurrency roadmap

Celigo continues to innovate in concurrency management, ensuring your workflows stay efficient, scalable, and ready for the demands of tomorrow.

Here’s a look at what’s on the horizon:

1. Data Steward Mobile App

The upcoming Data Steward Mobile App puts real-time workflow control in your hands, enabling you to manage and resolve concurrency-related issues anytime, anywhere.

Key features:

- Push notifications: Get real-time alerts for critical errors or performance bottlenecks.

- Exception resolution: Tag, categorize, and resolve issues directly from your mobile device.

- Quick actions: Retry failed processes and adjust concurrency settings to minimize disruptions on the go.

2. Advanced data ingestion capabilities

Celigo is enhancing its platform to support complex, high-volume data pipelines with greater flexibility and efficiency.

Planned enhancements:

- Multi-stage transformations: Process and transform datasets in granular stages for precise control over workflows.

- Optimized bulk operations: Handle large-scale data transfers with improved speed and reduced overhead.

- Edge case handling: Automate the detection and resolution of anomalies to ensure your pipelines remain robust and reliable.

3. AI-driven concurrency optimization

Celigo is harnessing the power of AI to simplify and enhance concurrency management with intelligent, proactive tools.

Future AI innovations:

- Predictive scaling: Automatically adjust concurrency settings based on real-time workload trends and historical usage patterns.

- Error prediction: Identify potential issues before they occur, with actionable recommendations to avoid disruptions.

- Configuration suggestions: Leverage AI insights to fine-tune settings like page sizes, thread counts, and throttle delays for peak performance.

These innovations are built to enhance workflow efficiency, support seamless scalability, and address evolving data integration challenges with precision and reliability.

Maximize throughput and keep data flowing

Effective concurrency management is essential for optimizing large data volume processing in an iPaaS. By configuring parallel execution, leveraging AI-driven optimizations, and implementing robust exception handling, organizations can achieve higher throughput, reduced latency, and seamless scalability.

By following these best practices, teams can ensure smooth, efficient, and resilient integrations—even under the most demanding data conditions.
