Published Jul 3, 2024

Flow building 101: Gathering integration requirements

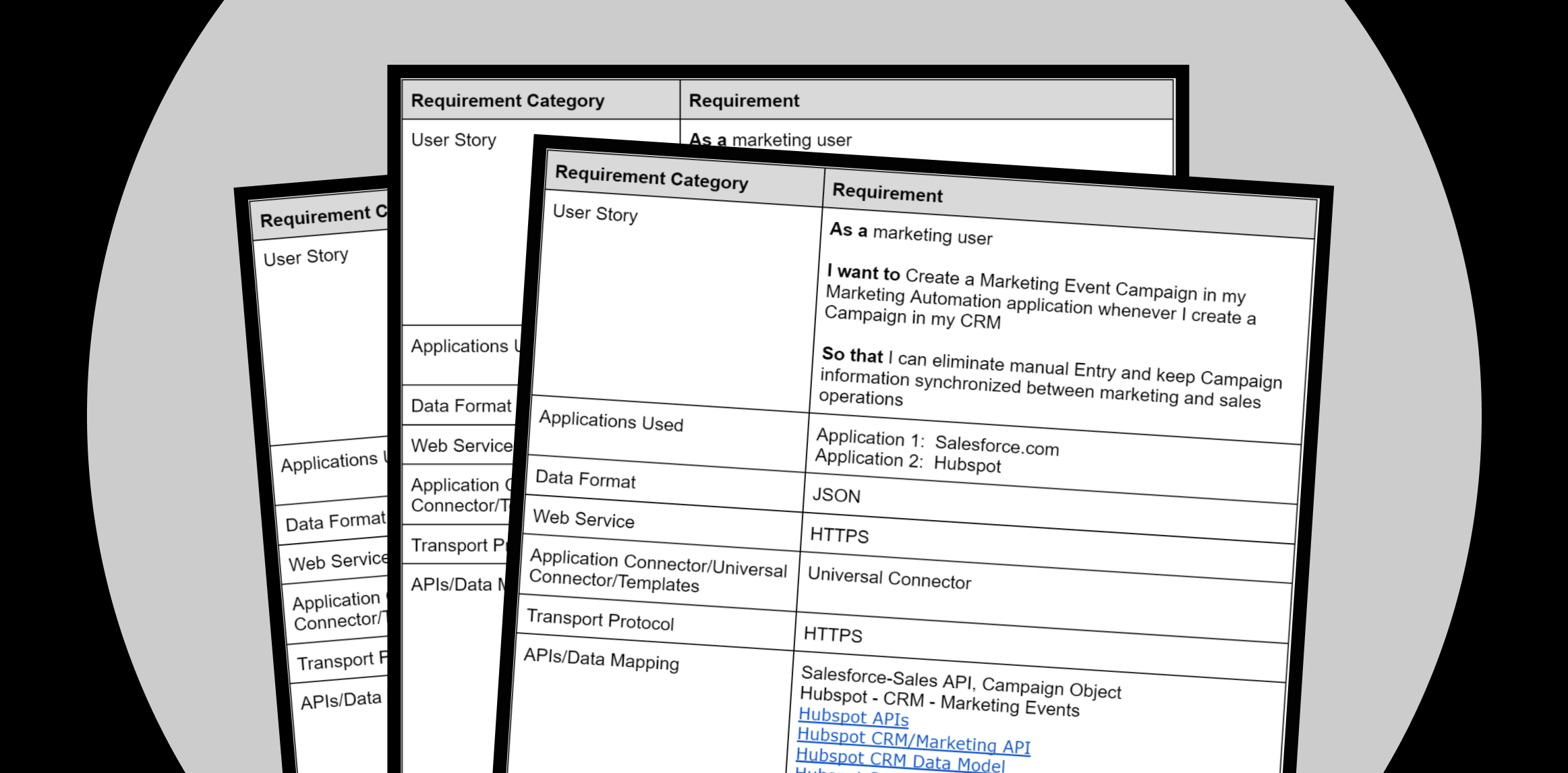

Creating a flow means solving a business problem that you or someone you work with faces. For example, you may work in marketing operations, routinely create Event Campaigns in Salesforce, and then re-key the same information into HubSpot as a Marketing Event.

You’d like to eliminate the double data entry. How do you do that in the Celigo Platform, integrator.io?

When you analyze this business process, you're essentially capturing requirements. At Celigo, we advocate a simple yet effective method of gathering requirements, inspired by the user story structure described by Mike Cohn, a co-founder of the Scrum Alliance and an experienced Scrum Master.

This method is easy to follow and suitable for everyone, whether you’re an integration architect or a DIY enthusiast.

Get our Requirements Document to help you prepare before you begin the integration process.

Let’s break it down.

What’s your story?

The Scrum Alliance recommends structuring requirements as user stories. Each story has an actor, a business function the actor wants to perform, and a business reason for performing it. For example:

As a __________ (type of actor)

I want to _______ (business function)

So that _________ (business reason)

In our example, as a marketing ops user, we would break down the story like so:

| As a | I want to | So that |
|---|---|---|
| Marketing Operations User | Create a Marketing Event Campaign in my Marketing Automation application whenever I create a Campaign in my CRM | I can eliminate manual entry and keep Campaign information synchronized between marketing and sales operations |
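You may track requirements outside a document, for example in a repository or a ticketing export. In that case, it can help to capture each story as structured data so that none of the three parts gets dropped. The following minimal Python sketch illustrates the idea. `UserStory` and `render` are hypothetical names of our own, not part of integrator.io.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class UserStory:
    """One requirement in the As a / I want to / So that structure (hypothetical type)."""

    actor: str     # type of actor
    function: str  # business function the actor wants to perform
    reason: str    # business reason for performing it

    def render(self) -> str:
        # Produce the three-line story as it would appear in a requirements document.
        return (
            f"As a {self.actor}\n"
            f"I want to {self.function}\n"
            f"So that {self.reason}"
        )


story = UserStory(
    actor="Marketing Operations User",
    function=(
        "create a Marketing Event Campaign in my Marketing Automation application "
        "whenever I create a Campaign in my CRM"
    ),
    reason=(
        "I can eliminate manual entry and keep Campaign information synchronized "
        "between marketing and sales operations"
    ),
)

print(story.render())
```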

Now that you have established the actor, the business function, and the business reason for your flow, you can build out the requirements for your flow by answering a set of questions.

When you combine your storyline with the answers to your requirements questions, you have a blueprint requirements document for building your flow.

Create the storyline

What applications do you plan to synchronize?

Identify the applications that you will use and what you plan to synchronize or transform.

| Source application | Target application | Synchronization |
|---|---|---|
| Salesforce CRM | HubSpot | When a Campaign is created in Salesforce, create a Marketing Event with the same name in HubSpot. |

What type of connection do you need?

Celigo comes with hundreds of application connectors that can jumpstart your integration. When building your requirements, we recommend carefully reviewing the application connector documentation.

If the out-of-the-box connector doesn’t have all the endpoints needed, you can create a Universal Connection.

| Connection type | Description |
|---|---|
| Application | Prebuilt connectors for cloud-based applications like Salesforce, NetSuite, and Zendesk. Application connectors save end users time because they include commonly used resources, endpoints, and query parameters (import, export). |
| Database | Connectors for database platforms such as MySQL, Oracle, and SQL Server. |
| Universal | Universal connectors let you authenticate with HTTP-based platforms, FTP servers, AS2 platforms, GraphQL platforms, or wrappers. Use a universal connector if no application connector exists for the platform you are connecting to, or if you need access to APIs or functionality beyond the prebuilt connector's endpoints. |
| On-premise | Connectors that establish connections with on-premise applications and data sources inside your internal network. |

You can also explore using prebuilt templates (available at no charge) on the Integration Marketplace. Celigo templates act as pre-configured workflows that automate connection definition, data mapping, data transformation, and data integration between various applications.

What format will the data be in?

A data format is a specific way of arranging and storing data so that a computer program can understand it.

In our flow, the data format will be JSON, a popular format for web APIs.

| Data format | Description | Purpose |
|---|---|---|
| CSV (comma-separated values) | Plain text file where data values are separated by commas. | Widely used for exchanging simple tabular data between applications. |
| CSV (Integrator DIY produced) | Similar to standard CSV, but may contain variations or extensions specific to a particular integration tool. | Enables data exchange within a specific integration platform, but may not be universally compatible. |
| Database/SQL | Structured data stored in a database and managed by a database management system, typically queried with SQL. | Provides efficient storage, retrieval, manipulation, and analysis of large datasets. |
| EDI (electronic data interchange) | Structured format for exchanging business documents such as invoices or purchase orders. | Streamlines communication between different business systems. |
| Fixed width | Flat file where each data element has a predefined width (number of characters). | Ensures consistent data alignment, which is useful for older systems or batch processing. |
| Flat file | Any text file with a basic structure, often delimited by tabs or spaces. | Simple way to store and share data, but can be less organized than other formats. |
| JSON (JavaScript Object Notation) | Human-readable format that uses key-value pairs to represent data structures. | Popular for data exchange in web APIs because it is lightweight and easy to use. |
| XML (Extensible Markup Language) | Flexible, text-based format that uses tags to define data structure. | Widely used for data exchange because it is platform independent and easy to parse. |
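The following small Python sketch makes the differences concrete. It writes the same record as JSON, CSV, XML, and fixed width, using only the standard library. The record and its field names (`name`, `start_date`, `status`) are hypothetical, and the column widths are arbitrary choices for illustration.

```python
import csv
import io
import json
import xml.etree.ElementTree as ET

# Hypothetical campaign record; field names are illustrative, not a real API schema.
record = {"name": "Summer Webinar", "start_date": "2024-07-10", "status": "Planned"}

# JSON: key-value pairs, the format most web APIs exchange.
as_json = json.dumps(record)

# CSV: a header row followed by comma-separated values.
buffer = io.StringIO()
writer = csv.DictWriter(buffer, fieldnames=list(record))
writer.writeheader()
writer.writerow(record)
as_csv = buffer.getvalue()

# XML: tags define the structure.
root = ET.Element("campaign")
for key, value in record.items():
    ET.SubElement(root, key).text = value
as_xml = ET.tostring(root, encoding="unicode")

# Fixed width: each field padded to a predefined number of characters.
widths = {"name": 20, "start_date": 10, "status": 8}
as_fixed = "".join(record[field].ljust(width) for field, width in widths.items())

for label, text in [("JSON", as_json), ("CSV", as_csv), ("XML", as_xml), ("Fixed width", as_fixed)]:
    print(f"{label}:\n{text}\n")
```

Note that `ljust` pads but does not truncate. A real fixed-width export would also need a rule for values longer than their column.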

What web service will you use?

A web service is a software application that exposes functionality over the web using standardized protocols and data formats. It allows applications to communicate and exchange data with each other in a programmatic way, regardless of the underlying programming languages or platforms they are built on.

In our example, we will use the most popular type of web service: a REST-based web service.

| Web service type | Description | Purpose |
|---|---|---|
| REST-based web services | Architectural style for web APIs that use HTTP requests and responses, with methods such as GET, POST, PUT, and DELETE, to access and manipulate data. RESTful web services typically expose resources through URLs, and clients interact with those resources using the appropriate HTTP method. For instance, a RESTful API might return product information in response to a GET request to a URL like /products/123. | Modern, flexible approach to web service communication, popular for its simplicity and scalability. |
| SOAP-based web services | Protocol for exchanging information between applications using XML messages. | Legacy method for web service communication that can be complex to implement. |
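The REST pattern in the table maps each operation to an HTTP method on a resource URL. The following minimal Python sketch builds (but does not send) those requests with the standard library's `urllib.request.Request`. It assumes a hypothetical API at `https://api.example.com` with a `/products` resource.

```python
import json
from urllib.request import Request

BASE_URL = "https://api.example.com"  # hypothetical API host, for illustration only
JSON_HEADERS = {"Accept": "application/json", "Content-Type": "application/json"}


def get_product(product_id: int) -> Request:
    # Read one resource: GET /products/{id}
    return Request(f"{BASE_URL}/products/{product_id}", method="GET",
                   headers={"Accept": "application/json"})


def create_product(payload: dict) -> Request:
    # Create a resource: POST /products with a JSON body
    return Request(f"{BASE_URL}/products", method="POST",
                   data=json.dumps(payload).encode("utf-8"), headers=JSON_HEADERS)


def update_product(product_id: int, payload: dict) -> Request:
    # Replace a resource: PUT /products/{id} with a JSON body
    return Request(f"{BASE_URL}/products/{product_id}", method="PUT",
                   data=json.dumps(payload).encode("utf-8"), headers=JSON_HEADERS)


def delete_product(product_id: int) -> Request:
    # Remove a resource: DELETE /products/{id}
    return Request(f"{BASE_URL}/products/{product_id}", method="DELETE")


for req in (get_product(123), create_product({"name": "Widget"}),
            update_product(123, {"name": "Widget v2"}), delete_product(123)):
    print(req.get_method(), req.full_url)
```

To actually send a request, you would pass it to `urllib.request.urlopen`. In a flow, the connection typically handles this for you.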

What transport protocol will be used?

A transport protocol is a set of rules that computers use to deliver data accurately and efficiently. Protocols like these underpin all sorts of network communication, from browsing the web to sending email to streaming video, and help ensure that data gets where it needs to go quickly and reliably. (Strictly speaking, the protocols below operate at the application layer on top of TCP, but they determine how your flow moves data.)

In our flow, we’ll be using HTTPS.

| Transport protocol | Description | Purpose |
|---|---|---|
| SFTP: SSH File Transfer Protocol (often called Secure File Transfer Protocol) | File transfer protocol that runs over SSH (Secure Shell), a cryptographic network protocol for operating network services securely over an unsecured network. Despite the similar name, SFTP is a separate protocol from FTP. | Securely transfer files between computers over a network. SFTP authenticates users and encrypts both data and commands during transfer. |
| FTPS: File Transfer Protocol Secure | Extends FTP with TLS/SSL (Transport Layer Security/Secure Sockets Layer) for encryption. SSL is the predecessor to TLS; both secure communication by encrypting data exchanged between applications on different computers. | Securely transfer files between computers over a network, like SFTP but with a different security mechanism. FTPS applies TLS/SSL to FTP's control and data connections. |
| HTTP(S): Hypertext Transfer Protocol (Secure) | The foundation of web communication. HTTPS adds an SSL/TLS layer for encryption. | Transfer data over the web, including web pages, images, API requests, and other resources. HTTPS is the secure version of HTTP, ensuring that data is encrypted in transit between a client, such as a browser or an integration platform, and a web server. |
| AS2: Applicability Statement 2 | A secure protocol designed specifically for exchanging business data between organizations over HTTP(S). | Exchange business data, such as invoices or purchase orders, reliably and securely between companies. AS2 uses digital signatures and encryption to ensure data integrity, non-repudiation, and security during transfers. |
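If your requirements call for HTTPS, one detail worth confirming is that the client verifies the server's certificate. The following small illustration uses Python's standard `ssl` module; it is not integrator.io configuration. The default client context already enforces certificate and hostname checks. The TLS 1.2 minimum shown is an example policy choice, not a stated Celigo requirement.

```python
import ssl

# Python's default client context turns on certificate verification and hostname checking.
context = ssl.create_default_context()

# Example policy: refuse protocol versions older than TLS 1.2.
context.minimum_version = ssl.TLSVersion.TLSv1_2

print(context.verify_mode == ssl.CERT_REQUIRED)  # True
print(context.check_hostname)  # True
```

A context like this can be passed as `context=context` to `urllib.request.urlopen` or `http.client.HTTPSConnection`.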

What authentication method will you use?

OAuth 2.0 is one of the most common authentication methods, but other options are available. Include the method each application requires in your requirements.

| Method | Description |
|---|---|
| Basic | Sends the username and password, encoded in Base64, in the Authorization header. Base64 is an encoding, not encryption, so Basic authentication should only be used over HTTPS. |
| Token | Uses a pre-obtained token for authentication, so a username and password aren't needed on each request. |
| OAuth 1.0 | Enables integrator.io to access an HTTP service using the OAuth 1.0 protocol, with a choice of signature method for the request. |
| OAuth 2.0 | Uses the OAuth 2.0 framework so that integrator.io can obtain limited access to an HTTP service on your behalf. Scopes define the level of access granted. |
| Cookie | integrator.io includes cookies containing authentication information in the request. |
| Digest | integrator.io includes an Authorization header containing an MD5 hash calculated from the credentials and other fields, as defined in RFC 2617. |
| WSSE | integrator.io includes an X-WSSE header, based on the Web Services Security (WSS) specification, for authentication. |
| Custom | Supports APIs with custom authentication methods not covered by the standard options. |
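The header-based methods in the table differ mainly in what travels with each request. The following Python sketch implements three of them using only the standard library. The function names are hypothetical. The Digest function covers only the RFC 2617 `qop="auth"` case (no `auth-int`, no `MD5-sess`).

```python
import base64
import hashlib


def basic_auth_header(username: str, password: str) -> dict[str, str]:
    # Basic: Base64-encode "username:password". Encoding is not encryption; use only over HTTPS.
    token = base64.b64encode(f"{username}:{password}".encode("utf-8")).decode("ascii")
    return {"Authorization": f"Basic {token}"}


def bearer_auth_header(access_token: str) -> dict[str, str]:
    # Token / OAuth 2.0: present a previously obtained access token on every request.
    return {"Authorization": f"Bearer {access_token}"}


def _md5_hex(text: str) -> str:
    return hashlib.md5(text.encode("utf-8")).hexdigest()


def digest_response(username: str, password: str, realm: str, method: str, uri: str,
                    nonce: str, nc: str, cnonce: str, qop: str = "auth") -> str:
    # Digest (RFC 2617, qop="auth"): the password itself is never sent, only hashes.
    ha1 = _md5_hex(f"{username}:{realm}:{password}")
    ha2 = _md5_hex(f"{method}:{uri}")
    return _md5_hex(f"{ha1}:{nonce}:{nc}:{cnonce}:{qop}:{ha2}")


print(basic_auth_header("Aladdin", "open sesame"))
# {'Authorization': 'Basic QWxhZGRpbjpvcGVuIHNlc2FtZQ=='}

print(digest_response(
    username="Mufasa", password="Circle Of Life", realm="testrealm@host.com",
    method="GET", uri="/dir/index.html", nonce="dcd98b7102dd2f0e8b11d0f600bfb0c093",
    nc="00000001", cnonce="0a4f113b",
))
```

In a flow, integrator.io adds these headers based on the connection's authentication settings. A sketch like this is mainly useful for checking what a target API expects before you configure the connection.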

What will trigger the integration and how often will the integration run?

Triggers are the sparks that ignite your integration flows. They define when an automation kicks off, ensuring your data flows and tasks run smoothly.

Let’s break down the different types of trigger frequencies:

- Real-time triggers: Real-time triggers fire the flow immediately after the triggering event occurs. For instance, a new customer placing an order triggers an email confirmation to be sent right away.

- Scheduled triggers: Scheduled triggers run the flow at a specific time or interval (daily, hourly, etc.). For example, an inventory report might be generated automatically and sent every Sunday evening.

- Batch triggers: Batch triggers accumulate a set number of events (a “batch”) before activating the flow. This is useful for processing large volumes of data more efficiently. For example, instead of constantly sending individual orders from an ecommerce system, a batch trigger might wait until 100 orders occur, then send a single consolidated batch file to an ERP.

- Webhooks and Listeners: Proactive triggers

  - Webhooks: Webhooks are a way for external applications to proactively notify the iPaaS when something specific happens (like a new order placed). The iPaaS then listens for these incoming notifications and triggers the appropriate flow.

  - Listeners: These are constantly open channels. The iPaaS actively monitors a specific source (like a database) for changes. When a relevant event occurs (e.g., new data added), the listener detects it and triggers the flow.
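The batch behavior described above can be sketched in a few lines of Python. `BatchTrigger` is a hypothetical helper, not an integrator.io API. It collects events and calls a callback once the batch reaches a set size. A separate `flush` call sends a final partial batch.

```python
from typing import Callable, Generic, List, TypeVar

T = TypeVar("T")


class BatchTrigger(Generic[T]):
    """Accumulate events and fire one callback per full batch (hypothetical helper)."""

    def __init__(self, batch_size: int, on_batch: Callable[[List[T]], None]) -> None:
        if batch_size < 1:
            raise ValueError("batch_size must be at least 1")
        self.batch_size = batch_size
        self.on_batch = on_batch
        self._pending: List[T] = []

    def add(self, event: T) -> None:
        self._pending.append(event)
        if len(self._pending) >= self.batch_size:
            self.flush()

    def flush(self) -> None:
        # Send whatever has accumulated; also call this at the end of a run for a partial batch.
        if self._pending:
            batch, self._pending = self._pending, []
            self.on_batch(batch)


sent: List[List[str]] = []  # stands in for "send a consolidated file to the ERP"
trigger = BatchTrigger(batch_size=100, on_batch=sent.append)
for n in range(250):
    trigger.add(f"order-{n}")
trigger.flush()
print([len(batch) for batch in sent])  # [100, 100, 50]
```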

Choosing the right trigger

The best trigger frequency depends on your needs. Real-time is ideal for critical actions, while scheduled triggers suit routine tasks. Batch triggers are perfect for handling large datasets without overwhelming the system. Webhooks and Listeners provide a proactive approach to external events or continuous monitoring.

In our example, the flow synchronizes new Salesforce Campaigns to HubSpot Marketing Events nightly at 9 pm ET.
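When a requirement says "nightly at 9 pm ET," one detail to pin down is daylight saving time: ET is UTC-4 in summer and UTC-5 in winter. The following minimal Python sketch computes the next run in Eastern wall-clock time. It assumes Python 3.9+ for `zoneinfo`, which also needs time zone data available on the system. `next_nightly_run` is a hypothetical helper that only illustrates the requirement; the scheduler in integrator.io is what actually runs the flow.

```python
from datetime import datetime, time, timedelta, timezone, tzinfo
from typing import Optional
from zoneinfo import ZoneInfo


def next_nightly_run(now: datetime, run_at: time = time(21, 0),
                     tz: Optional[tzinfo] = None) -> datetime:
    """Return the next occurrence of run_at (default 9:00 pm) in tz; `now` must be timezone-aware."""
    if tz is None:
        tz = ZoneInfo("America/New_York")  # Eastern Time, including DST transitions
    local_now = now.astimezone(tz)
    candidate = datetime.combine(local_now.date(), run_at, tzinfo=tz)
    if candidate <= local_now:
        # Today's run time has passed, so schedule for tomorrow at the same wall-clock time.
        candidate = datetime.combine(local_now.date() + timedelta(days=1), run_at, tzinfo=tz)
    return candidate


summer_et = timezone(timedelta(hours=-4))  # fixed EDT offset for a deterministic example
print(next_nightly_run(datetime(2024, 7, 3, 22, 0, tzinfo=summer_et), tz=summer_et))
# 2024-07-04 21:00:00-04:00
```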

What APIs and data models will be referenced?

Understanding the APIs available and the data structure of the applications that you plan to synchronize will help you build your flow. Synchronizing data can be tricky because different applications will name objects differently, and you need to understand the nuances.

For example, in one system, an object that stores information about companies is called “Organizations”; in another system, it’s called “Accounts,” and in a third system, it’s called “Companies.”

We recommend that you review the APIs, entity relationship diagrams (ERDs), and data models of both the source and target applications for the flows you build.

In our example, we reference the following APIs and data models.

| Reference | Type |
|---|---|
| HubSpot CRM Data Model | Data Model |
| Salesforce Object Reference | Data Model |
| Salesforce Data Model | Data Model |
| HubSpot APIs | API |
| HubSpot CRM/Marketing API | API |
| HubSpot Scopes | API |
| Salesforce APIs | API |

iPaaS requirements: business rules

Each set of flows will require thinking through business rules that must be identified, reviewed, and decided upon (perhaps within a requirements session conducted with business users).

This type of session is often called a “requirements workshop.” Our advice is to keep it simple: focus on one flow at a time, and be prepared to listen, iterate, document, and listen some more. Discovering business processes and rules is often messy because it requires understanding how systems are used cross-functionally. It will involve “people, processes, and technology,” with people being the key to explaining what needs to be done.

Common considerations to review in a requirements workshop are listed below.

Directionality and source of truth

- Data flow direction: Determine which systems will be the source of truth for specific data elements. This clarifies whether updates flow in one direction (unidirectional) or both directions (bidirectional) between systems.

- Conflict resolution strategy: For bidirectional flows, define how conflicts will be resolved if the same data element is updated in both systems simultaneously. Will the latest update win, or will there be a more complex validation process?

- Error handling: Establish how errors during data transfer will be handled. Should retries be attempted? Will notifications be sent to admins in case of failures?
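How conflicts are resolved and whether failed transfers are retried often end up as small, explicit rules. The following Python sketch shows one common answer to each: last write wins, and retry with exponential backoff. Both are illustrative choices to discuss in the workshop. The function names and the `last_modified` field are hypothetical.

```python
import time
from datetime import datetime
from typing import Callable, Dict, List, TypeVar

T = TypeVar("T")


def last_write_wins(a: Dict, b: Dict, ts_field: str = "last_modified") -> Dict:
    """Keep the version with the newer timestamp; on a tie, keep `a` (treated as source of truth)."""
    return b if b[ts_field] > a[ts_field] else a


def with_retries(call: Callable[[], T], attempts: int = 3, base_delay: float = 1.0,
                 sleep: Callable[[float], None] = time.sleep) -> T:
    """Retry a failing call with exponential backoff; re-raise after the last attempt."""
    for attempt in range(attempts):
        try:
            return call()
        except Exception:  # a real flow would retry only transient errors (timeouts, 429, 5xx)
            if attempt == attempts - 1:
                raise  # out of retries: surface the error so admins can be notified
            sleep(base_delay * 2 ** attempt)  # waits 1s, 2s, 4s, ... by default
    raise ValueError("attempts must be at least 1")


crm = {"name": "Summer Webinar", "last_modified": datetime(2024, 7, 1, 9, 0)}
marketing = {"name": "Summer Webinar (updated)", "last_modified": datetime(2024, 7, 2, 9, 0)}
print(last_write_wins(crm, marketing)["name"])  # Summer Webinar (updated)

calls = {"n": 0}


def flaky() -> str:
    # Fails twice, then succeeds, to simulate a temporary outage.
    calls["n"] += 1
    if calls["n"] < 3:
        raise ConnectionError("temporary failure")
    return "ok"


delays: List[float] = []
result = with_retries(flaky, sleep=delays.append)  # record delays instead of sleeping
print(result, delays)  # ok [1.0, 2.0]
```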

Primary identifier

- Unique identifier selection: Identify a common field or combination of fields that uniquely identifies each record across all connected systems. This lets the flow match records accurately rather than creating duplicates.

- Identifier maintenance: Discuss how these identifiers will be maintained. Will a central system manage them, or will each system be responsible for generating unique IDs?
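Matching on an identifier is what lets a flow update an existing record instead of creating a duplicate. The following minimal Python sketch assumes that an in-memory dict stands in for the target system and that the business has agreed on which fields form the key. `composite_key` and `upsert` are hypothetical helpers. Where one system can store the other's record ID (for example, a field holding the Salesforce Campaign Id), keying on that single stable ID is often more reliable than normalized names.

```python
from typing import Dict, Iterable, Tuple

Key = Tuple[str, ...]


def composite_key(record: Dict[str, str], fields: Iterable[str]) -> Key:
    # Normalize each field so that "Summer Webinar" and "summer webinar " match.
    return tuple(record[field].strip().lower() for field in fields)


def upsert(target: Dict[Key, Dict[str, str]], record: Dict[str, str],
           fields: Iterable[str]) -> str:
    """Create the record if its key is new; otherwise update the existing record in place."""
    key = composite_key(record, fields)
    if key in target:
        target[key].update(record)
        return "updated"
    target[key] = dict(record)
    return "created"


store: Dict[Key, Dict[str, str]] = {}
key_fields = ["name", "start_date"]
print(upsert(store, {"name": "Summer Webinar", "start_date": "2024-07-10"}, key_fields))  # created
print(upsert(store, {"name": "summer webinar ", "start_date": "2024-07-10",
                     "status": "Active"}, key_fields))  # updated
print(len(store))  # 1
```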

Orchestrating and transforming data

- Data mapping: Define how data elements from one system should map to another. This may involve identifying corresponding fields, handling missing values, and applying data formatting (e.g., converting dates and currency).

- Data aggregation and summarization: It may be advantageous to combine data from multiple sources and transform it into summarized formats (e.g., totals, averages) for further analysis.

- Integration flow (event trigger): Define the sequence of events for data transfer, including triggers (e.g., new record creation) and the actions to be performed (e.g., creating or updating data in the target application).

- Data enrichment: Data can be incorporated from external sources (e.g., demographics, weather data) to enrich existing datasets and create a more comprehensive view.

- Data quality requirements: Establish data quality expectations by defining rules to handle errors, missing values, and inconsistencies. This may involve setting thresholds, defining correction methods, or establishing rejection criteria for bad data.
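Field mapping, format conversion, and a data quality rule can be sketched together. In the following Python example, the source field names (`Name`, `StartDate`, `EndDate`) follow Salesforce's standard Campaign fields. The target names (`eventName`, `startDateTime`, `endDateTime`) are assumptions modeled on a HubSpot-style marketing event, so confirm both against each application's API documentation. Converting date-only values to midnight UTC is also an assumption that your workshop should decide.

```python
from datetime import date, datetime, time, timezone
from typing import Dict, List, Tuple

# Hypothetical field mapping; verify field names against each application's API docs.
FIELD_MAP = {"Name": "eventName", "StartDate": "startDateTime", "EndDate": "endDateTime"}
REQUIRED = ("Name", "StartDate")  # data quality rule: reject records missing these


def to_iso_datetime(value: str) -> str:
    # Convert a date-only string (YYYY-MM-DD) to an ISO 8601 timestamp at midnight UTC.
    return datetime.combine(date.fromisoformat(value), time(0, 0), tzinfo=timezone.utc).isoformat()


def transform(source: Dict[str, str]) -> Tuple[Dict[str, str], List[str]]:
    """Map one record and return (mapped_record, errors); a non-empty error list means reject."""
    errors = [f"missing {field}" for field in REQUIRED if not source.get(field)]
    if errors:
        return {}, errors
    mapped: Dict[str, str] = {}
    for src_field, dst_field in FIELD_MAP.items():
        value = source.get(src_field)
        if value is None:
            continue  # optional field absent: leave it unset rather than sending null
        if dst_field.endswith("DateTime"):
            try:
                value = to_iso_datetime(value)
            except ValueError:
                errors.append(f"bad date in {src_field}: {value!r}")
                continue
        mapped[dst_field] = value
    return mapped, errors


print(transform({"Name": "Summer Webinar", "StartDate": "2024-07-10"}))
# ({'eventName': 'Summer Webinar', 'startDateTime': '2024-07-10T00:00:00+00:00'}, [])
print(transform({"StartDate": "2024-07-10"}))
# ({}, ['missing Name'])
```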

Other common requirements

- Data security: Define the security protocols for data exchange between systems. This includes authentication, authorization, and encryption methods to ensure data privacy and integrity.

- Performance: Discuss the expected data volume and frequency of updates. This helps determine the scalability and performance needs.

- Auditing and compliance: Discuss any auditing or compliance requirements that the flows need to meet. This might involve data lineage tracking, adherence to industry regulations such as HIPAA, or masking financial information.

- Scalability and future needs: Consider the potential for future growth and integration with additional systems. The flow should make business sense and be adaptable to accommodate evolving business needs.
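When a compliance requirement calls for masking financial information, the rule is usually easy to state and worth writing down precisely. The following Python sketch assumes that the agreed rule is "show only the last four characters." `mask` is a hypothetical helper. Values too short to partially reveal are masked entirely.

```python
def mask(value: str, visible: int = 4, mask_char: str = "*") -> str:
    """Mask all but the last `visible` characters, ignoring spaces and dashes."""
    chars = "".join(ch for ch in value if ch.isalnum())
    if len(chars) <= visible:
        return mask_char * len(chars)  # too short to reveal any part safely
    return mask_char * (len(chars) - visible) + chars[-visible:]


print(mask("4111 1111 1111 1111"))  # ************1111
print(mask("123"))                  # ***
```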

Additional tips:

- Document everything: Clearly document all agreed-upon business rules to ensure everyone involved in the integration process is on the same page.

Gathering requirements for well-planned integrations

Capturing requirements is essential when analyzing business processes. At Celigo, we advocate for a simple yet effective method of gathering requirements. By adopting this approach, you can ensure that your integrations are well-planned, meet user needs, and are primed for success.

As we continue to innovate and refine our methods, embracing such straightforward and inclusive techniques will be key to driving successful integration projects and achieving business excellence.